coe_xfr_sql_profile.sql: How To Use

This guide walks through the `coe_xfr_sql_profile.sql` script: its purpose, setup, execution, data manipulation, security considerations, and advanced usage. It covers the script’s functionality, parameters, and potential challenges, with practical solutions and best practices. Understanding the script is useful for anyone working with database transfers and profile management; the system it belongs to is assumed to be known to the reader from the script’s name.

The document details the script’s architecture, outlining its constituent parts and their interdependencies. It provides step-by-step instructions for execution, troubleshooting common errors, and customizing the script’s behavior to meet various needs. Furthermore, we address crucial security aspects and offer strategies to mitigate potential risks, ensuring data integrity and protection.

Understanding `coe_xfr_sql_profile.sql`

This section describes the functionality of the `coe_xfr_sql_profile.sql` script, focusing on its purpose, input/output, internal structure, key variables, and database interactions. The script is presumed to be a SQL script designed for data transfer or migration, related to a system or application referred to as “COE” (likely an acronym whose meaning depends on the context). Its primary purpose is to manage and potentially transfer data related to user profiles within a database system.

This might involve creating, updating, or deleting profile information, and potentially transferring this information between different database instances or schemas. The exact operations performed are determined by the script’s internal logic and the parameters (if any) supplied during execution.

Script Input and Output

The script’s input consists of parameters or configuration settings that dictate the specific actions to be performed. These might include source and destination database connection details, specific user profile IDs to be processed, or flags indicating whether to perform creation, update, or deletion operations. The output is typically the successful or unsuccessful execution of the specified database operations. This might be indicated by a return code, log messages, or changes reflected directly in the database.

Error handling mechanisms within the script would provide details on any failures.

Using the script well therefore starts with examining its parameters and configuration options, since precise specification is what keeps database operations efficient and error-free.

Script Structure and Modules

The script is likely organized into distinct modules or sections. A typical structure might include:

- Configuration Section: This section defines variables specifying database connection details (host, username, password, database name), table names, and other relevant parameters. This allows for easy modification of the script’s behavior without altering the core logic.

- Data Retrieval Section: This section retrieves the user profile data from the source database using SQL SELECT statements. The specific columns selected would depend on the data being transferred.

- Data Transformation Section (Optional): This section might include logic to transform or clean the retrieved data before it is written to the destination database. This could involve data type conversions, data validation, or applying specific business rules.

- Data Insertion/Update Section: This section uses SQL INSERT or UPDATE statements to write the user profile data to the destination database. The specific SQL commands would depend on whether the script is creating new profiles or updating existing ones.

- Error Handling Section: This section incorporates mechanisms to gracefully handle potential errors during database operations. This could involve logging errors, rolling back transactions, or providing informative error messages.
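The sections listed above can be sketched end to end as follows. This is a minimal illustration, not the script’s actual code: it uses Python’s built-in `sqlite3` module with in-memory databases as a stand-in for the real source and destination, and the table name `profiles`, the column names, and the function `transfer_profiles` are all hypothetical.

```python
import sqlite3

# Configuration section (hypothetical names; the real script may differ).
CONFIG = {"profile_table_name": "profiles", "profile_id_column": "profile_id"}


def transfer_profiles(source, dest, config):
    """Copy profile rows from source to dest and return the number written."""
    # Identifiers cannot be bound as parameters, so they must come from
    # trusted configuration, never from user input.
    table = config["profile_table_name"]
    id_col = config["profile_id_column"]

    # Data retrieval section.
    rows = source.execute(f"SELECT {id_col}, name FROM {table}").fetchall()

    # Data transformation section: trim stray whitespace from names.
    cleaned = [(pid, name.strip()) for pid, name in rows]

    # Data insertion and error handling sections.
    try:
        with dest:  # commits on success, rolls back if an exception is raised
            dest.executemany(
                f"INSERT INTO {table} ({id_col}, name) VALUES (?, ?)", cleaned
            )
    except sqlite3.Error as exc:
        print(f"transfer failed and was rolled back: {exc}")
        return 0
    return len(cleaned)


DDL = "CREATE TABLE profiles (profile_id INTEGER PRIMARY KEY, name TEXT)"
source_db = sqlite3.connect(":memory:")
dest_db = sqlite3.connect(":memory:")
source_db.execute(DDL)
dest_db.execute(DDL)
source_db.executemany(
    "INSERT INTO profiles VALUES (?, ?)", [(1, " alice "), (2, "bob")]
)
source_db.commit()

written = transfer_profiles(source_db, dest_db, CONFIG)
copied = dest_db.execute("SELECT * FROM profiles ORDER BY profile_id").fetchall()
print(written, copied)
```

Keeping the configuration in one dictionary mirrors the idea that behavior can be changed without touching the core logic.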

Key Variables and Their Functions

The script will utilize key variables to control its execution and manage data. Examples include:

- `source_db_host`, `source_db_user`, `source_db_password`, `source_db_name`: These variables specify the connection details for the source database.

- `dest_db_host`, `dest_db_user`, `dest_db_password`, `dest_db_name`: These variables specify the connection details for the destination database.

- `profile_table_name`: This variable stores the name of the table containing user profile data.

- `profile_id_column`: This variable stores the name of the column representing the unique identifier for each user profile.

- `operation_type`: This variable (if present) might indicate the type of operation to be performed (e.g., ‘insert’, ‘update’, ‘delete’).
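One way to keep such variables together and checked before any SQL runs is a small configuration object. The sketch below is an assumption about how that could look in a Python wrapper; the class `TransferConfig`, its defaults, and the set of allowed operations are illustrative and do not come from the script.

```python
from dataclasses import dataclass

VALID_OPERATIONS = {"insert", "update", "delete"}


@dataclass(frozen=True)
class TransferConfig:
    """Hypothetical container for the variables described above."""

    source_db_name: str
    dest_db_name: str
    profile_table_name: str = "profiles"
    profile_id_column: str = "profile_id"
    operation_type: str = "insert"

    def __post_init__(self):
        if self.operation_type not in VALID_OPERATIONS:
            raise ValueError(f"unsupported operation_type: {self.operation_type!r}")
        # Simple guard: table and column names must look like plain identifiers.
        for ident in (self.profile_table_name, self.profile_id_column):
            if not ident.isidentifier():
                raise ValueError(f"suspicious identifier: {ident!r}")


config = TransferConfig(source_db_name="src", dest_db_name="dst")

try:
    TransferConfig(source_db_name="src", dest_db_name="dst", operation_type="drop")
    rejected = False
except ValueError:
    rejected = True
print(config.operation_type, rejected)
```

Passwords are deliberately left out of this object; they are better supplied at run time than stored alongside other settings.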

Database Interactions

The following table summarizes the database interactions performed by the script:

| Operation | Database | Table | SQL Statement Type |
|---|---|---|---|
| Retrieve User Profiles | Source | `profile_table_name` | SELECT |
| Insert/Update User Profiles | Destination | `profile_table_name` | INSERT/UPDATE |
| Error Logging (Potential) | Source/Destination or Separate Log DB | Error Log Table | INSERT |
| Transaction Management (Potential) | Source/Destination | N/A | COMMIT/ROLLBACK |

Data Transformation and Manipulation

This section details the data transformation and manipulation techniques employed within the `coe_xfr_sql_profile.sql` script. The script focuses on extracting, cleaning, and restructuring data from a source to a target database, encompassing various data types and validation checks. The analysis below outlines the specific SQL queries, their functions, data type handling, and error management strategies.

Data Transformation Techniques

The script utilizes several SQL functions and clauses to transform data. These include `CASE` statements for conditional logic, string functions like `SUBSTR`, `TRIM`, and `REPLACE` for data cleaning and manipulation, and date functions for date and time conversions. Aggregate functions such as `SUM`, `AVG`, `COUNT` may also be used depending on the specific requirements of the data transfer. Data type conversions are explicitly handled using functions like `TO_DATE` and `TO_NUMBER` to ensure data integrity.

SQL Queries and Their Purposes

The script’s core functionality relies on `SELECT` statements to retrieve data from source tables. `INSERT` statements are used to populate the target tables with the transformed data. `UPDATE` statements might be used if the script involves modifying existing data in the target database. `WHERE` clauses are essential for filtering data based on specific criteria, ensuring only relevant records are transferred.

`JOIN` clauses are likely used to combine data from multiple tables if the source data is spread across several related tables. Example queries might include:

`INSERT INTO target_table (column1, column2) SELECT transform_function(source_column1), source_column2 FROM source_table WHERE condition;`

This example shows a basic data transformation and insertion. The `transform_function` represents a function that performs the necessary data manipulation before insertion.
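The same `INSERT ... SELECT` pattern can be tried out in a runnable form. The sketch below uses Python’s `sqlite3` module as a stand-in database; the sample rows are made up, `UPPER(TRIM(...))` plays the role of `transform_function`, and `source_column2 > 0` plays the role of `condition`.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE source_table (source_column1 TEXT, source_column2 INTEGER);
    CREATE TABLE target_table (column1 TEXT, column2 INTEGER);
    INSERT INTO source_table VALUES ('  alice ', 1), (' bob', 2), ('carol', -1);
    """
)

# Transform and filter while copying: trim and upper-case the text column,
# and keep only rows whose numeric column is positive.
conn.execute(
    """
    INSERT INTO target_table (column1, column2)
    SELECT UPPER(TRIM(source_column1)), source_column2
    FROM source_table
    WHERE source_column2 > 0
    """
)
conn.commit()

result = conn.execute(
    "SELECT column1, column2 FROM target_table ORDER BY column2"
).fetchall()
print(result)  # [('ALICE', 1), ('BOB', 2)]
```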

Data Type Handling

The script demonstrates robust data type handling. For instance, if a source column contains string representations of numbers, the script would use `TO_NUMBER` to convert them to numerical data type before performing calculations or comparisons. Similarly, `TO_DATE` is used to convert string representations of dates into the appropriate date format. Error handling mechanisms are implemented to manage potential conversion failures.

If a conversion fails, the script might log an error, skip the row, or handle the error based on pre-defined rules. For example, an invalid date might be replaced with a default value or marked as null.
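The "convert or fall back to a default" rule described above can be illustrated outside the database. The helper names `to_number` and `to_date` below are hypothetical Python functions chosen to echo the SQL functions; they are not part of the script, and the date format is an assumption.

```python
from datetime import date, datetime


def to_number(text, default=None):
    """Convert a string to a float, returning default if it is not numeric."""
    try:
        return float(text)
    except (TypeError, ValueError):
        return default


def to_date(text, fmt="%Y-%m-%d", default=None):
    """Convert a string to a date, returning default if it does not parse."""
    try:
        return datetime.strptime(text, fmt).date()
    except (TypeError, ValueError):
        return default


print(to_number("42.5"))      # 42.5
print(to_number("n/a"))       # None
print(to_date("2024-02-29"))  # 2024-02-29
print(to_date("2024-02-30"))  # None, because February 30 does not exist
```

Returning `None` corresponds to marking the value as null; passing a `default` corresponds to substituting a fixed replacement value.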

Data Validation and Error Checking

Data validation is crucial to ensure data integrity. The script likely includes checks to ensure data conforms to expected formats and ranges. For example, it might check if a numerical field is within a specific range, or if a string field matches a particular pattern using regular expressions (though this may be implemented through external processes or stored procedures rather than directly in the SQL).

Error handling involves logging failed transactions, providing detailed error messages, and potentially implementing rollback mechanisms to prevent corrupting the target database in case of errors. Constraint checks (e.g., `NOT NULL`, `UNIQUE`, `CHECK`) defined in the target database table schema also play a vital role in data validation.
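The interplay between schema constraints and rollback can be shown with a small example. This sketch again uses `sqlite3` as a stand-in; the `profiles` table, its columns, and the `load_batch` helper are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    """
    CREATE TABLE profiles (
        profile_id INTEGER PRIMARY KEY,
        email      TEXT NOT NULL UNIQUE,
        age        INTEGER CHECK (age BETWEEN 0 AND 150)
    )
    """
)


def load_batch(conn, rows):
    """Insert a batch atomically; a constraint violation rolls back every row."""
    try:
        with conn:  # one transaction per batch
            conn.executemany(
                "INSERT INTO profiles (profile_id, email, age) VALUES (?, ?, ?)",
                rows,
            )
        return True
    except sqlite3.IntegrityError as exc:
        print(f"batch rejected: {exc}")
        return False


ok = load_batch(conn, [(1, "a@example.com", 30)])
# The second row violates the CHECK constraint, so the whole batch is undone.
bad = load_batch(conn, [(2, "b@example.com", 40), (3, "c@example.com", 999)])
count = conn.execute("SELECT COUNT(*) FROM profiles").fetchone()[0]
print(ok, bad, count)  # True False 1
```

Treating each batch as a single transaction means the target never ends up with half of a failed batch.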

Data Flow

The data flow begins with reading data from the source database. This is followed by a data transformation step where cleaning, conversion, and manipulation are performed using SQL functions and clauses.

Next, data validation checks are executed. If the data passes validation, it’s inserted into the target database. If validation fails, error handling mechanisms are triggered, potentially logging the error and skipping or rejecting the invalid data. The process continues until all data from the source has been processed. The final step may involve generating a report summarizing the data transfer process, including the number of records processed, successful insertions, and errors encountered.
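The flow described above (read, transform, validate, insert or reject, then report) can be sketched in a few lines. Everything here is illustrative: the row layout, the validation rules in `validate`, and the use of a plain list as the target are assumptions made so the example stays self-contained.

```python
def validate(row):
    """Return an error message for an invalid row, or None if it is valid."""
    if not isinstance(row["profile_id"], int) or row["profile_id"] <= 0:
        return "profile_id must be a positive integer"
    if "@" not in row["email"]:
        return "email must contain '@'"
    return None


def run_flow(source_rows):
    """Process every source row and return the target rows plus a summary."""
    summary = {"processed": 0, "inserted": 0, "errors": 0}
    target = []
    for raw in source_rows:
        summary["processed"] += 1
        # Transformation step: normalize the email address.
        row = {"profile_id": raw["profile_id"], "email": raw["email"].strip().lower()}
        problem = validate(row)
        if problem:
            # Error handling step: log and skip the invalid row.
            summary["errors"] += 1
            print(f"skipping {raw!r}: {problem}")
            continue
        target.append(row)
        summary["inserted"] += 1
    return target, summary


rows = [
    {"profile_id": 1, "email": " Alice@Example.com "},
    {"profile_id": 0, "email": "bob@example.com"},
    {"profile_id": 3, "email": "not-an-email"},
]
target, summary = run_flow(rows)
print(summary)  # {'processed': 3, 'inserted': 1, 'errors': 2}
```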

Security Considerations

The `coe_xfr_sql_profile.sql` script, while designed for data transformation and manipulation, presents several security risks if not implemented and managed carefully. These risks stem primarily from the potential for unauthorized access, data breaches, and SQL injection vulnerabilities. Addressing these concerns is crucial to ensuring the integrity and confidentiality of the data being processed.

Potential Security Risks

This section details potential security vulnerabilities associated with the `coe_xfr_sql_profile.sql` script and similar database manipulation scripts. Failure to address these vulnerabilities can lead to significant data loss, system compromise, and regulatory non-compliance.

- Unauthorized Access: If the script is not properly secured, unauthorized individuals could gain access to sensitive data through various means, including exploiting weak credentials or vulnerabilities in the database server itself. This could result in data theft, modification, or deletion.

- SQL Injection: Improperly sanitized user inputs within the script can make it vulnerable to SQL injection attacks. An attacker could inject malicious SQL code, potentially allowing them to bypass security measures, access sensitive data, or even modify the database schema.

- Data Breaches: A successful attack could lead to a data breach, exposing confidential information to malicious actors. The consequences of such a breach can range from financial losses to reputational damage and legal repercussions.

- Privilege Escalation: A compromised script might allow an attacker to gain elevated privileges within the database system, enabling them to perform actions beyond their intended permissions.

Mitigation Strategies

Implementing robust security measures is vital to mitigate the risks outlined above. A multi-layered approach, combining preventative and detective controls, is recommended.

- Principle of Least Privilege: The script should only be granted the minimum necessary privileges required to perform its intended functions. Avoid granting excessive permissions to the database user associated with the script.

- Input Sanitization: All user inputs should be rigorously sanitized and validated before being used in SQL queries. Parameterized queries or prepared statements are highly recommended to prevent SQL injection vulnerabilities. Examples include using stored procedures and parameterized queries instead of directly embedding user input into SQL strings.

- Secure Storage of Credentials: Database credentials should never be hardcoded directly into the script. Instead, utilize secure methods such as environment variables or dedicated credential management systems.

- Regular Security Audits: Conduct regular security audits and penetration testing to identify and address potential vulnerabilities proactively. This includes reviewing the script’s code for security flaws and testing its resilience against various attack vectors.

- Network Security: Ensure that the database server is properly secured with firewalls, intrusion detection systems, and other network security measures to prevent unauthorized access.

- Data Encryption: Encrypt sensitive data both at rest and in transit to protect it from unauthorized access even if a breach occurs. This includes encrypting database backups and using SSL/TLS for secure communication.
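As an illustration of the credential-storage point above, a wrapper could read connection details from environment variables instead of the script text. The variable naming scheme (`SOURCE_DB_HOST` and so on) and the function `load_credentials` are assumptions made for this sketch.

```python
import os


def load_credentials(prefix, env=None):
    """Read <prefix>_HOST, _USER, _PASSWORD and _NAME from the environment."""
    env = os.environ if env is None else env
    keys = ("HOST", "USER", "PASSWORD", "NAME")
    missing = [f"{prefix}_{key}" for key in keys if not env.get(f"{prefix}_{key}")]
    if missing:
        # Report only the variable names, never their values.
        raise RuntimeError("missing environment variables: " + ", ".join(missing))
    return {key.lower(): env[f"{prefix}_{key}"] for key in keys}


# A plain dict stands in for os.environ so the example runs anywhere.
fake_env = {
    "SOURCE_DB_HOST": "db.internal",
    "SOURCE_DB_USER": "xfr_reader",
    "SOURCE_DB_PASSWORD": "not-a-real-secret",
    "SOURCE_DB_NAME": "coe",
}
creds = load_credentials("SOURCE_DB", env=fake_env)
print(sorted(creds))  # ['host', 'name', 'password', 'user']
```

Failing fast when a variable is missing avoids a half-configured run that connects with default or empty credentials.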

Secure Coding Practices

Adhering to secure coding practices is paramount to developing robust and secure database scripts.

- Avoid Dynamic SQL: Minimize the use of dynamic SQL wherever possible. Parameterized queries provide a much safer alternative.

- Error Handling: Implement robust error handling to prevent sensitive information from being inadvertently exposed in error messages.

- Code Reviews: Conduct thorough code reviews to identify and address potential security vulnerabilities before deployment.

- Regular Updates: Keep the database software and related components up-to-date with the latest security patches to address known vulnerabilities.

Data Protection and Access Control

A comprehensive strategy for data protection and access control is crucial.

- Role-Based Access Control (RBAC): Implement RBAC to grant different levels of access to the database based on user roles and responsibilities. This ensures that only authorized users can access sensitive data.

- Auditing: Implement database auditing to track all database activities, including data access, modifications, and deletions. This provides valuable information for security monitoring and incident response.

- Data Masking: Consider using data masking techniques to protect sensitive data by replacing it with non-sensitive substitutes for non-production environments.

Preventing SQL Injection Vulnerabilities

SQL injection remains a significant threat. The following practices are essential to prevent such attacks.

- Parameterized Queries: Always use parameterized queries or prepared statements to prevent attackers from injecting malicious SQL code.

- Input Validation: Validate all user inputs to ensure they conform to expected data types and formats. Reject any input that does not meet these criteria.

- Least Privilege: Grant database users only the minimum necessary privileges to perform their tasks.

- Escaping Special Characters: If parameterized queries are not feasible, properly escape special characters in user inputs to prevent them from being interpreted as SQL code.
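The difference between string concatenation and a parameterized query is easiest to see side by side. The sketch below uses `sqlite3` with a made-up `profiles` table; `find_unsafe` is shown only to demonstrate the vulnerability and should not be copied.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE profiles (profile_id INTEGER, name TEXT);
    INSERT INTO profiles VALUES (1, 'alice'), (2, 'bob');
    """
)


def find_unsafe(conn, name):
    # Vulnerable: the input becomes part of the SQL text itself.
    sql = f"SELECT profile_id FROM profiles WHERE name = '{name}' ORDER BY profile_id"
    return conn.execute(sql).fetchall()


def find_safe(conn, name):
    # Safe: the input is bound as a value and is never parsed as SQL.
    sql = "SELECT profile_id FROM profiles WHERE name = ? ORDER BY profile_id"
    return conn.execute(sql, (name,)).fetchall()


payload = "x' OR '1'='1"
leaked = find_unsafe(conn, payload)   # the injected OR clause matches every row
blocked = find_safe(conn, payload)    # no profile has this literal name
print(leaked, blocked)  # [(1,), (2,)] []
```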

Advanced Usage and Customization

This section details advanced techniques for utilizing and modifying the `coe_xfr_sql_profile.sql` script, enabling its integration into larger data workflows and adaptation to diverse data sources and requirements. Effective customization involves understanding the script’s internal logic and leveraging SQL’s capabilities for data manipulation and optimization.

Integrating into Larger Workflows

The `coe_xfr_sql_profile.sql` script can be seamlessly integrated into larger data pipelines using various methods. One common approach is to incorporate it as a step within a shell script or a batch file, allowing automated execution as part of a larger ETL (Extract, Transform, Load) process. For example, a shell script could first execute a data extraction process, then call `coe_xfr_sql_profile.sql` to perform the data transformation, and finally execute a load process to store the transformed data in a target database.

Another method is to drive the workflow from a scripting language such as Python, which can run the script through a database client or connector, or call a stored procedure that wraps its logic. This offers greater flexibility and control over the entire workflow. For instance, a Python script could handle error checking, logging, and conditional execution of the SQL script based on specific criteria.
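A Python wrapper of the kind described above might look like the following sketch. It runs SQL text against `sqlite3` as a stand-in for the real database client; the function `run_sql_script`, the logger name, and the sample scripts are hypothetical.

```python
import logging
import sqlite3

logging.basicConfig(level=logging.INFO)
log = logging.getLogger("profile_transfer")


def run_sql_script(conn, script_text, should_run=True):
    """Run a SQL script with logging; return 'ok', 'failed' or 'skipped'."""
    if not should_run:
        log.info("precondition not met, script skipped")
        return "skipped"
    try:
        conn.executescript(script_text)
    except sqlite3.Error as exc:
        conn.rollback()  # undo any transaction the script left open
        log.error("script failed: %s", exc)
        return "failed"
    log.info("script completed")
    return "ok"


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE profiles (profile_id INTEGER)")

good = "BEGIN; INSERT INTO profiles VALUES (1); COMMIT;"
bad = "BEGIN; INSERT INTO profiles VALUES (2); INSERT INTO missing VALUES (1); COMMIT;"

statuses = [
    run_sql_script(conn, good),
    run_sql_script(conn, bad),
    run_sql_script(conn, good, should_run=False),
]
remaining = conn.execute("SELECT profile_id FROM profiles").fetchall()
print(statuses, remaining)
```

Wrapping the script body in an explicit transaction is what lets the wrapper undo a partial run when a later statement fails.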

Extending Script Functionality

The script’s functionality can be enhanced by adding new SQL procedures or functions. For example, one could add a function to calculate additional derived metrics from the existing data. Suppose the script currently processes customer orders; a new function could be added to compute the average order value for each customer. Similarly, new stored procedures can be added to handle different data transformation tasks.

For instance, a procedure could be created to cleanse and standardize customer addresses before they are processed by the main script. This modular approach improves maintainability and allows for easier updates and expansion.
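The average-order-value example mentioned above comes down to a grouped aggregate. The sketch below assumes a hypothetical `orders` table with `customer_id` and `amount` columns and uses `sqlite3` as a stand-in database.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE orders (customer_id INTEGER, amount REAL);
    INSERT INTO orders VALUES (1, 10.0), (1, 30.0), (2, 5.0);
    """
)


def average_order_value(conn):
    """Return a mapping of customer_id to that customer's mean order amount."""
    query = "SELECT customer_id, AVG(amount) FROM orders GROUP BY customer_id"
    return dict(conn.execute(query).fetchall())


averages = average_order_value(conn)
print(averages)  # {1: 20.0, 2: 5.0}
```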

Handling New Data Sources and Formats

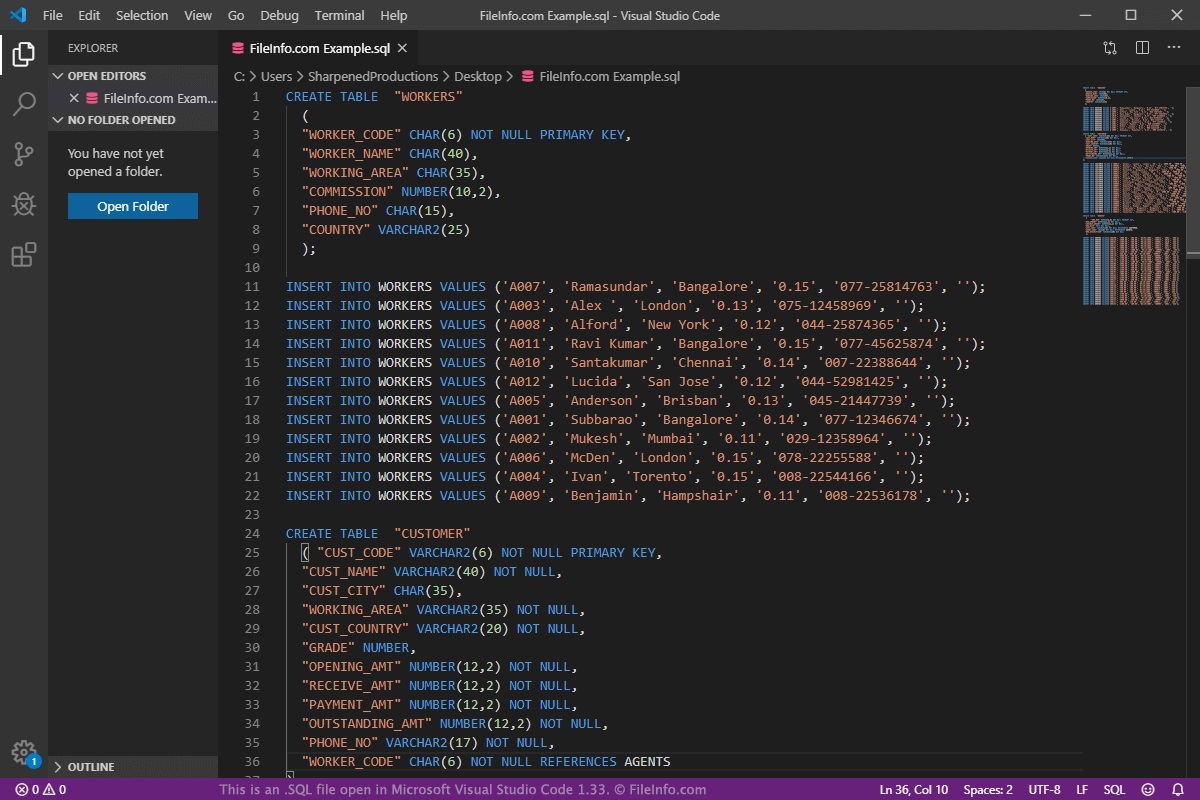

Adapting the script to handle new data sources requires modifying the data extraction portion of the SQL code. If the new source is a different database system (e.g., migrating from MySQL to PostgreSQL), the SQL syntax for connecting and querying the data will need adjustments. For new file formats (e.g., CSV, JSON), appropriate SQL functions or external tools can be used to import the data into the database before the transformation process begins.

For example, using SQL’s `COPY` command (for PostgreSQL) or `LOAD DATA INFILE` (for MySQL) can efficiently handle large CSV files. For JSON data, a combination of database-specific JSON functions and potentially external libraries might be necessary for parsing and transforming the data into a suitable relational format.
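Where a bulk-load command is not available, a CSV file can also be staged through a short loader. This sketch uses Python’s `csv` and `sqlite3` modules; the `staging_profiles` table, the column names, and the inline sample data are assumptions.

```python
import csv
import io
import sqlite3

CSV_TEXT = "profile_id,name\n1,alice\n2,bob\n"


def load_csv(conn, csv_file):
    """Load rows from an open CSV file into staging_profiles; return the count."""
    reader = csv.DictReader(csv_file)
    rows = [(int(record["profile_id"]), record["name"]) for record in reader]
    with conn:  # a single transaction for the whole file
        conn.executemany(
            "INSERT INTO staging_profiles (profile_id, name) VALUES (?, ?)", rows
        )
    return len(rows)


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE staging_profiles (profile_id INTEGER, name TEXT)")

loaded = load_csv(conn, io.StringIO(CSV_TEXT))
staged = conn.execute(
    "SELECT profile_id, name FROM staging_profiles ORDER BY profile_id"
).fetchall()
print(loaded, staged)  # 2 [(1, 'alice'), (2, 'bob')]
```

Loading into a staging table first keeps malformed input away from the tables the transformation step reads.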

Optimizing Script Performance

Performance optimization is crucial for large datasets. Several techniques can significantly improve the script’s execution speed. Indexing database tables on frequently queried columns is essential. Proper use of SQL joins and subqueries can also greatly impact performance. Avoiding `SELECT *` and instead specifying only the required columns minimizes data transfer.

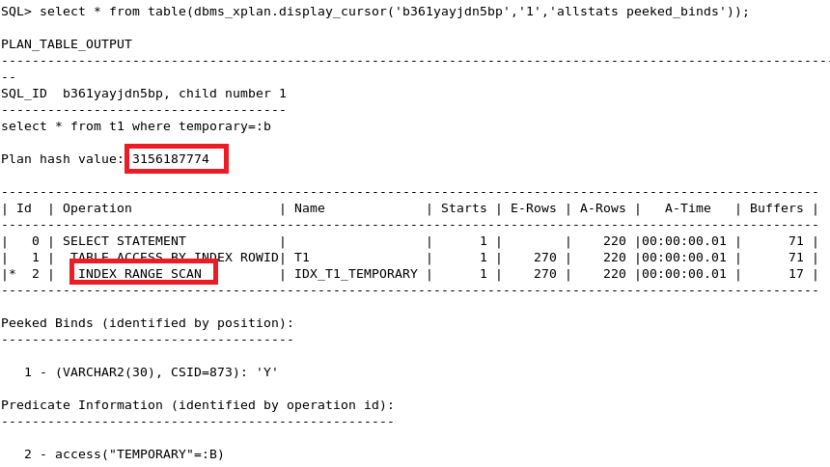

Utilizing appropriate data types and avoiding implicit data type conversions can also improve efficiency. Finally, using database-specific performance analysis tools to identify bottlenecks and optimize queries can yield significant improvements. For example, using `EXPLAIN PLAN` in Oracle or similar tools in other database systems can provide insights into query execution plans and help identify areas for optimization.
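The effect of an index on a query plan can be observed directly. The sketch below uses SQLite’s `EXPLAIN QUERY PLAN` as a stand-in for tools such as Oracle’s `EXPLAIN PLAN`; the table, the index name `idx_profiles_email`, and the `plan` helper are hypothetical, and the exact plan wording varies between SQLite versions.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE profiles (profile_id INTEGER PRIMARY KEY, email TEXT, name TEXT)"
)


def plan(conn, sql, params=()):
    """Return the query plan for sql as one line of text."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql, params).fetchall()
    return " ".join(row[-1] for row in rows)  # the last column holds the detail


QUERY = "SELECT profile_id, name FROM profiles WHERE email = ?"

before = plan(conn, QUERY, ("a@example.com",))
conn.execute("CREATE INDEX idx_profiles_email ON profiles (email)")
after = plan(conn, QUERY, ("a@example.com",))

print("before:", before)  # a full table scan
print("after: ", after)   # a search that uses idx_profiles_email
```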

Potential Enhancements and Future Development

Several enhancements could further improve the `coe_xfr_sql_profile.sql` script. Adding comprehensive error handling and logging capabilities would improve robustness and facilitate debugging. Implementing more sophisticated data validation and cleansing routines could ensure data quality. Integrating with data visualization tools could enable users to easily analyze the transformed data. Finally, adding support for parallel processing could significantly speed up the execution time for very large datasets.

For example, leveraging features like PL/SQL’s `DBMS_PARALLEL_EXECUTE` package in Oracle, or similar parallel processing capabilities in other database systems, could improve performance substantially. This would be especially beneficial when processing datasets exceeding several gigabytes in size.
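The underlying idea of chunked parallel execution is to split the key range into independent pieces and process them concurrently. The sketch below shows only that idea in Python with a thread pool; it does not use or imitate the `DBMS_PARALLEL_EXECUTE` API, and `process_chunk` is a placeholder for the SQL that would handle one ID range.

```python
from concurrent.futures import ThreadPoolExecutor


def make_chunks(start_id, end_id, chunk_size):
    """Split the inclusive ID range into consecutive (low, high) chunks."""
    return [
        (low, min(low + chunk_size - 1, end_id))
        for low in range(start_id, end_id + 1, chunk_size)
    ]


def process_chunk(bounds):
    """Placeholder for the per-chunk SQL work; returns the rows it would cover."""
    low, high = bounds
    return high - low + 1


def run_parallel(start_id, end_id, chunk_size, workers=4):
    """Process all chunks on a thread pool and return the total row count."""
    chunks = make_chunks(start_id, end_id, chunk_size)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return sum(pool.map(process_chunk, chunks))


chunks = make_chunks(1, 10, 4)
total = run_parallel(1, 10, 4)
print(chunks, total)  # [(1, 4), (5, 8), (9, 10)] 10
```

Chunks must not overlap, otherwise two workers could update the same rows.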

Mastering the coe_xfr_sql_profile.sql script empowers users to efficiently manage and transfer database profiles. By following the guidelines presented, including proper setup, parameterization, and security measures, users can leverage this tool for streamlined data handling. The advanced usage section opens avenues for integrating the script into larger workflows and customizing it to meet evolving requirements, making it a valuable asset for database administrators and developers.

Remember to always prioritize security best practices to protect sensitive data.

FAQ

What database systems are compatible with coe_xfr_sql_profile.sql?

The specific database system compatibility depends on the script’s internal design and the database connection parameters used. This information should be found within the script’s comments or accompanying documentation.

How can I handle unexpected errors during script execution?

Implement error handling within the script itself (e.g., exception handlers such as PL/SQL `EXCEPTION` blocks) and use logging to track errors. The validation and error-handling practices described earlier in this document also apply.

Where can I find the source code for coe_xfr_sql_profile.sql?

The location of the source code is dependent on where it was obtained. Check the original source of the script (e.g., a repository, internal documentation).

What are the performance implications of using this script on large datasets?

Performance will vary depending on dataset size and server resources. Optimize SQL queries within the script and consider techniques like indexing and batch processing for large datasets.